Multi-node Cluster

Overview

The Kloudspot software stack can be run on a High Availability Kubernetes cluster (three or more compute nodes). As with a Single Node Install, these instructions assume the use of MicroK8S; however, a similar approach should work with other K8S installations.

The primary requirement is that the underlying hardware must itself be highly available - no shared power, networking or physical components.

Please refer to the MicroK8S documentation for background to these instructions.

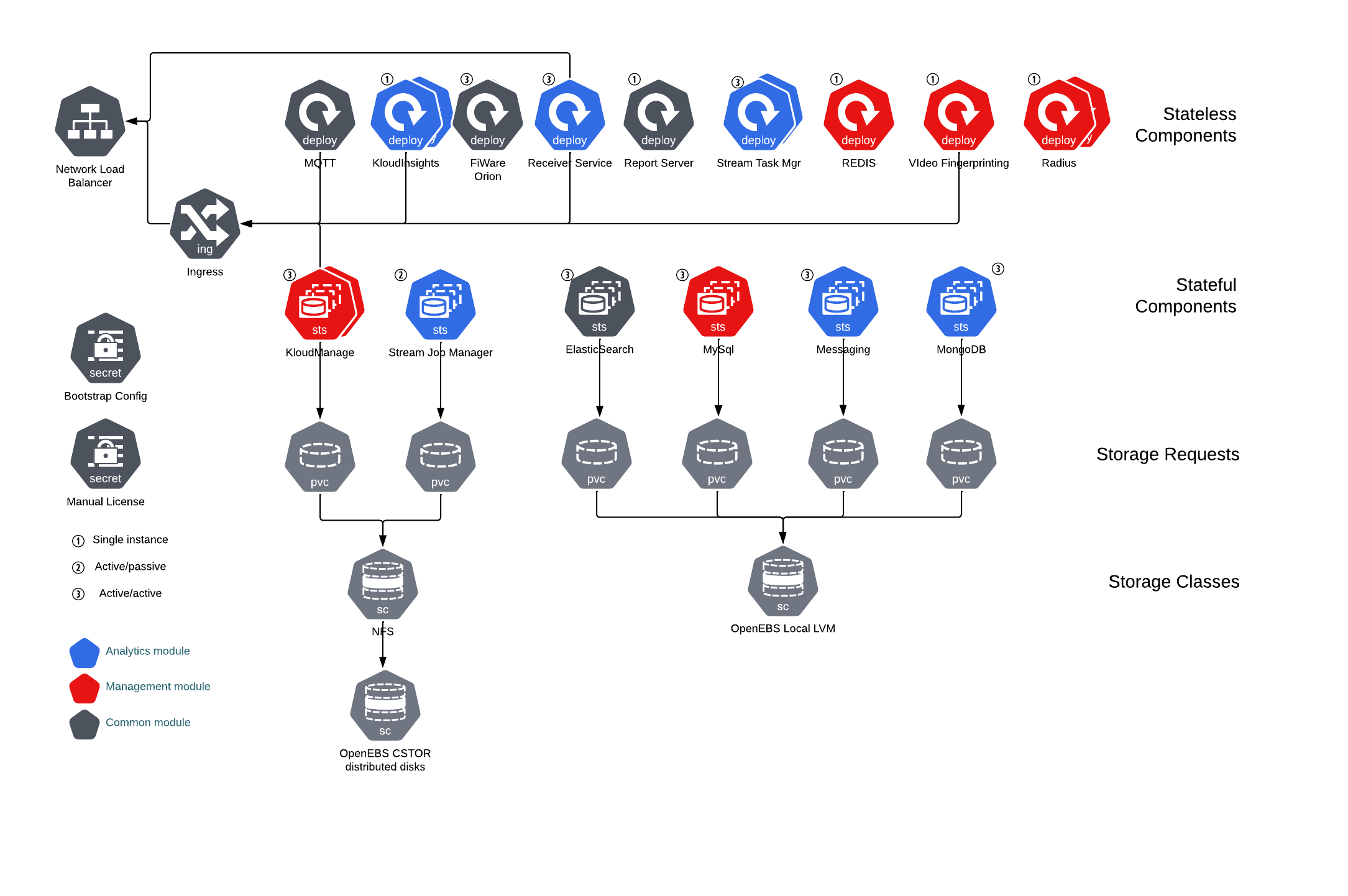

The cluster will be configured as follows:

Access to the cluster is via a single virtual IP address shared by the cluster and exposed by a network load balancer managed by MetalLB.

There are three types of component in the architecture:

- Stateless services with no shared storage (e.g. report generator)

- Stateful services with LVM storage on each node (e.g. MongoDB)

- Stateful services with OpenEBS cStor shared storage (e.g. Flink job manager)

If any node fails, the following happens:

- Stateless components will fail over to other nodes.

- Stateful components with LVM storage will continue to operate with degraded availability.

- Stateful components with shared storage will fail over to another node.

When the failed node comes back up:

- Stateless components will rebalance if necessary.

- Stateful components with LVM storage will restart, automatically resynchronize and start operating with full availability.

- Stateful components with shared storage will rebalance if necessary.
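To see which node has dropped out during a failure, and to confirm that it has rejoined afterwards, the node list can be filtered for anything not reporting Ready. The following is a minimal sketch, assuming the standard column layout of `microk8s kubectl get nodes --no-headers` (name first, status second). The helper name `not_ready_nodes` is illustrative and is not part of the Kloudspot tools.

```shell
# Print the name of every node whose STATUS column does not start with "Ready".
# Input: the output of 'kubectl get nodes --no-headers' on stdin.
# A cordoned node reports "Ready,SchedulingDisabled" and is treated as ready here.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage on any cluster node:
#   microk8s kubectl get nodes --no-headers | not_ready_nodes
```

Running `microk8s kubectl get pods -o wide` alongside this shows which node each pod is currently scheduled on, which makes the failover and rebalancing described above visible.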

System requirements

The CPU of every system used must support the AVX flag. Most newer bare metal systems do; VM servers often do not expose it by default, so please refer to your VM server documentation.
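On Linux the CPU flags are listed in `/proc/cpuinfo`, so AVX support can be checked before installing anything. This is a small sketch; the function name `has_avx` is an illustrative choice, and the optional file argument exists only so the check can be tried against a saved copy of `cpuinfo`.

```shell
# Succeed (exit status 0) if the first "flags" line of the cpuinfo file lists avx.
# 'grep -w' matches whole words only, so avx2 or avx512f alone do not count.
has_avx() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    grep -m1 -E '^flags[[:space:]]*:' "$cpuinfo" 2>/dev/null | grep -qw 'avx'
}

if has_avx; then
    echo "AVX flag present"
else
    echo "AVX flag missing: check the CPU model or the VM CPU settings"
fi
```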

Each node should have a minimum of the following specification:

- 8 cores

- 32 GB RAM

- 1 x 1TB SSD configured using LVM with 100 GB assigned to /

- Ubuntu 22.04 Server image

The recommended spec when running both KloudInsights and KloudManage is:

- 16 cores

- 64 GB RAM

- 1 x 1TB SSD configured using LVM with 100 GB assigned to /

- Ubuntu 22.04 Server image
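The core and memory figures above can be compared against a node with a few lines of shell. This is a rough sketch under stated assumptions: the helper names are illustrative, `nproc` counts logical CPUs (which may be hardware threads rather than physical cores), and RAM is rounded up to whole GB because the kernel reports slightly less than the installed amount.

```shell
# Logical CPU count as seen by the operating system.
node_cores() {
    nproc
}

# Installed RAM in GB, rounded up, read from the MemTotal line (reported in kB).
node_ram_gb() {
    awk '/^MemTotal:/ { printf "%d\n", ($2 + 1048575) / 1048576 }' "${1:-/proc/meminfo}"
}

# Succeed when the given cores and RAM meet the given minimums.
# Arguments: cores ram_gb min_cores min_ram_gb
meets_spec() {
    local cores="$1" ram_gb="$2" min_cores="$3" min_ram_gb="$4"
    [ "$cores" -ge "$min_cores" ] && [ "$ram_gb" -ge "$min_ram_gb" ]
}

# Usage on a node, for the minimum and the recommended specification:
#   meets_spec "$(node_cores)" "$(node_ram_gb)" 8 32  && echo "meets minimum"
#   meets_spec "$(node_cores)" "$(node_ram_gb)" 16 64 && echo "meets recommended"
```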

Three nodes are required for a system to be able to survive a node failure. If there is a heavy load on the system, one or more worker nodes may need to be added to the cluster to provide extra capacity.

Before you start the steps below, please obtain the following:

- A static IP Address for each node

- A static shared IP Address to use for the load balancer

- A DNS entry for the shared IP address

- A TLS certificate and key to use for the shared IP address (recommended)
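Before handing a certificate and key to the installer, it can be worth confirming that the two actually belong together. The sketch below compares the public key embedded in each file using the standard `openssl x509` and `openssl pkey` subcommands. The function name `cert_matches_key` is illustrative, and the file names in the usage comment are the ones used in the sample `kloudspot init` session in this guide.

```shell
# Succeed when the certificate and the private key carry the same public key.
# Both files are expected in PEM format; an encrypted key will prompt for its passphrase.
cert_matches_key() {
    local cert="$1" key="$2"
    local cert_pub key_pub
    cert_pub=$(openssl x509 -in "$cert" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$key" -pubout) || return 1
    [ "$cert_pub" = "$key_pub" ]
}

# Usage:
#   cert_matches_key server.cert server.key && echo "certificate and key match"
```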

Configure Each System

Install Ubuntu 22.04 on each system, and then update the system to the latest libraries:

sudo apt-get update

sudo apt-get -y upgrade

Accept the defaults for any questions asked during the upgrade.

Reboot, then install the Kloudspot tools using the following command:

curl -s https://registry.kloudspot.com/repository/files/on-prem-cluster.sh | sudo bash

Log out and log back in again.

Then:

At this point you have a configured system that you can use to create clones.

If you do this, please refer to this reference to change the machine-id on each clone.

Also make sure to configure the IP addresses correctly on each node.
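When nodes are created from clones, a quick way to confirm that every clone ended up with its own machine-id is to collect `/etc/machine-id` from each node and look for repeats. This is a minimal sketch; the helper name `duplicate_ids` is illustrative, and the host names in the usage comment are the example node names from the sample session in this guide, assumed to be reachable over SSH.

```shell
# Read one machine-id per line on stdin and print each value that occurs more than once.
# Empty output means every node has a unique machine-id.
duplicate_ids() {
    sort | uniq -d
}

# Usage from any machine with SSH access to the nodes:
#   for n in cluster1 cluster2 cluster3; do ssh "$n" cat /etc/machine-id; done | duplicate_ids
```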

Configure The Cluster

Configure Storage

Two types of storage are used for the installation:

- Shared - allocated from an OpenEBS cStor shared filesystem.

- Unshared - allocated from an LVM volume group using the OpenEBS LVM provisioner.

There needs to be enough free storage available to satisfy both needs. You can use the ‘kloudspot storage’ tool to review the available storage and estimate the required storage.

kloudspot@nmsc02:~$ kloudspot storage estimate

Assuming a 3 node cluster installation

Openebs not installed

? Do you want to install Openebs? : [? for help] (y/N)

installing OpenEBS...

Successfully installed OpenEBS.

All Pods are UP now...

Volume Groups:

- vg_data ( 93.1 GB - 87.1 GB free)

on /dev/sdb1

- vg_share ( 106.9 GB - 0.0 GB free)

on /dev/sdb2

Total Free space: 87.1 GB

Disks:

/dev/sda (50G)

/dev/sda1 (1M)

/dev/sda2 (50G) mounted as /

/dev/sdb (200G)

/dev/sdb1 (93.1G) in Volume Group vg_data

/dev/sdb2 (106.9G) in Volume Group vg_share

/dev/mapper/ubuntu--vg-shared (100G)

What features do you want to use

? Enable KloudManage : No

? Enable KloudInsights : Yes

? Enable Kloudhybrid : No

Using vg_data for unshared volumes

Available: Shared 106 GB, Unshared 87 GB

How much storage do you want to assign to each volume

? Stream processing elasticsearch (GB) : 10

? Stream processing state storage (GB) : 10

? Kloudinsights database (GB) : 10

? Kafka distributed messaging (GB) : 2

? Zookeeper coordinator (GB) : 2

Required: Shared 20 GB, Unshared 14 GB

The configuration looks OK

Remember each node needs this amount of storage
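The final check in the session above amounts to comparing the "Required" line against the "Available" line for each storage type. The sketch below reproduces that comparison with the figures from the sample output. It is an assumption about what the tool checks, not its actual implementation, and `storage_fits` is an illustrative name.

```shell
# Succeed only when both required amounts fit in what is available (whole GB).
# Arguments: avail_shared avail_unshared req_shared req_unshared
storage_fits() {
    local avail_shared="$1" avail_unshared="$2" req_shared="$3" req_unshared="$4"
    [ "$req_shared" -le "$avail_shared" ] && [ "$req_unshared" -le "$avail_unshared" ]
}

# Figures from the sample session: Available 106/87 GB, Required 20/14 GB.
if storage_fits 106 87 20 14; then
    echo "storage fits"
else
    echo "not enough free storage"
fi
```

Because each node needs this amount of storage, the same comparison applies to every node in the cluster.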

Prepare Kloudspot Configuration

You can use the ‘kloudspot init’ command to create a configuration file for your new system. It will ask a few questions and then create a ‘values.yaml’ file with the necessary configuration.

If you want to use your own StorageClass configuration, then please refer to CustomStorage.

sjerman@k8s-single:~$ kloudspot init

installing OpenEBS...

Successfully installed OpenEBS.

All Pods are UP now...

# If you have multiple bd on a node , select appropriate bd from list:

which blockdevice do you want to use for node cluster1 ? blockdevice-b168c57f62054cfea8ee52cbde230d77

which blockdevice do you want to use for node cluster2? blockdevice-124ba28ef2874c3aa2a94967ccda6000

which blockdevice do you want to use for node cluster3 ? blockdevice-04f218481b2c48b3aa2dd1f1767c4823

Waiting for all CSPI UP...

CSPI up now!

Initialize Kloudspot System Configuration

By default, the ingress controller will

use a self signed certificate. It is much better to

use a ‘proper’ SSL certificate.

Do you want to add ssl certificate? y/n

? Enter ssl key filepath: server.key

? Enter ssl ssl cert filepath: server.cert

Initialize Kloudspot System Configuration

First basic system information...

? DNS Hostname dibble.net

? Customer Reference steve

What features should be enabled

? Enable KloudManage No

? Enable KloudInsights No

? Enable Kloudhybrid Yes

Using ubuntu-vg for unshared volumes

Available: Shared 32 GB, Unshared 49 GB

How much storage do you want to assign to each volume

? Stream processing elasticsearch (GB) 10

? Kloudinsights database (GB) 10

Required: Shared 0 GB, Unshared 20 GB

'/etc/kloudspot/values.yaml' created sucessfully.

Deploy Kloudspot Helm Chart

Deploy the helm chart using:

kloudspot start

The deployment will take a while to complete. Use the following commands to monitor progress:

microk8s helm3 status kloudspot

microk8s kubectl get all

kloudspot status
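Rather than re-running the commands above by hand, the pod list can be polled until nothing is left starting. This is a rough sketch, assuming the standard column layout of `kubectl get pods --no-headers` (status in the third column). The helper names, the 10 second interval and the default of 60 attempts are illustrative choices, not part of the Kloudspot tools.

```shell
# Count pods whose STATUS column is neither Running nor Completed.
# Input: the output of 'kubectl get pods --no-headers' on stdin.
# Note that a Running pod may still be failing its readiness probe.
count_unready() {
    awk '$3 != "Running" && $3 != "Completed" { n++ } END { print n + 0 }'
}

# Poll every 10 seconds until no pod is pending, or give up after the given attempts.
wait_for_pods() {
    local tries="${1:-60}"
    while [ "$tries" -gt 0 ]; do
        if [ "$(microk8s kubectl get pods --no-headers | count_unready)" -eq 0 ]; then
            return 0
        fi
        sleep 10
        tries=$((tries - 1))
    done
    return 1
}

# Usage on a cluster node:
#   wait_for_pods 90 && echo "all pods started"
```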

Update the deployment

kloudspot update --update-helm

Uninstall the deployment

kloudspot stop

See here for general instructions on using Helm